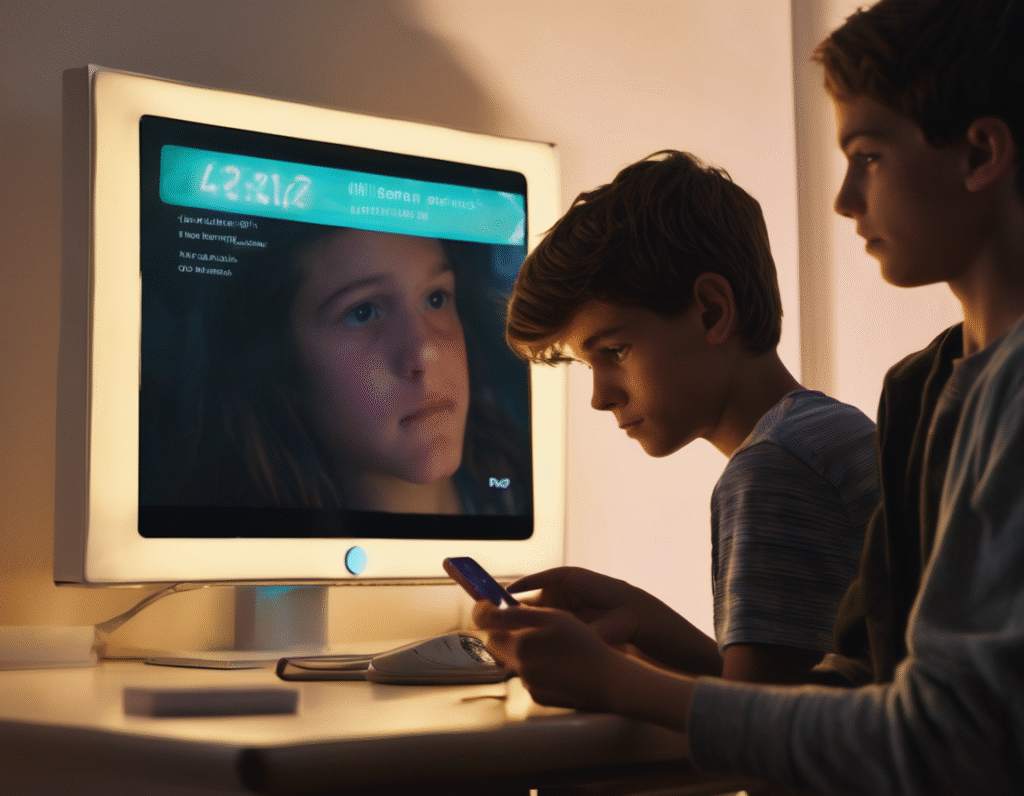

OpenAI Implements Parental Controls for ChatGPT Following Congressional Testimony

OpenAI is introducing new parental control features for its ChatGPT platform. The move comes in the wake of powerful testimony before the US Congress, where parents shared tragic stories of teenagers who took their own lives after interactions with AI chatbots. The new suite of tools is designed to give parents and guardians greater oversight and control over how their teenagers use ChatGPT.

In a recent announcement, the company detailed the upcoming features. Parents will be able to link their personal OpenAI account directly to their child’s account. This connection serves as a central hub for managing the teen’s interaction with the AI. From the linked account, parents can disable specific features as they see fit, tailoring the ChatGPT experience to their family’s values and safety concerns.

A significant feature is an alert system designed to notify a parent if the AI detects that a teenager might be in distress or exhibiting signs of a mental health crisis during a conversation. The goal is to provide an early warning that could allow for timely intervention.

To address concerns about overuse and to promote healthier digital habits, parents will also be able to set blackout hours. This function completely restricts access to ChatGPT during specified times, such as late at night, so that the platform does not interfere with sleep or other important activities.

The update will also allow parents to set specific guidelines for how ChatGPT interacts with their teen. They can establish boundaries on the types of topics or conversations the AI is permitted to engage in, creating a more guarded environment.

The announcement did not specify the exact threshold that would trigger a distress alert. Even so, the controls represent a proactive step by the company to address the potential for harmful interactions. The overall initiative reflects a growing acknowledgment within the tech industry that powerful AI tools, particularly those accessible to minors, require robust safety measures and parental involvement.

The decision to roll out these controls follows increasing scrutiny from lawmakers and the public regarding the potential risks associated with generative AI. The emotional testimony from families who have suffered losses underscored the urgent need for such protective features.

This development marks a pivotal moment for the AI sector, highlighting a shift towards greater responsibility and user protection. For the crypto and web3 community, which often intersects with AI development, it serves as a reminder of the critical importance of building safety and ethical considerations into powerful new technologies from the ground up. As AI continues to evolve and become more integrated into daily life, establishing clear guardrails, especially for younger users, is becoming an industry imperative.