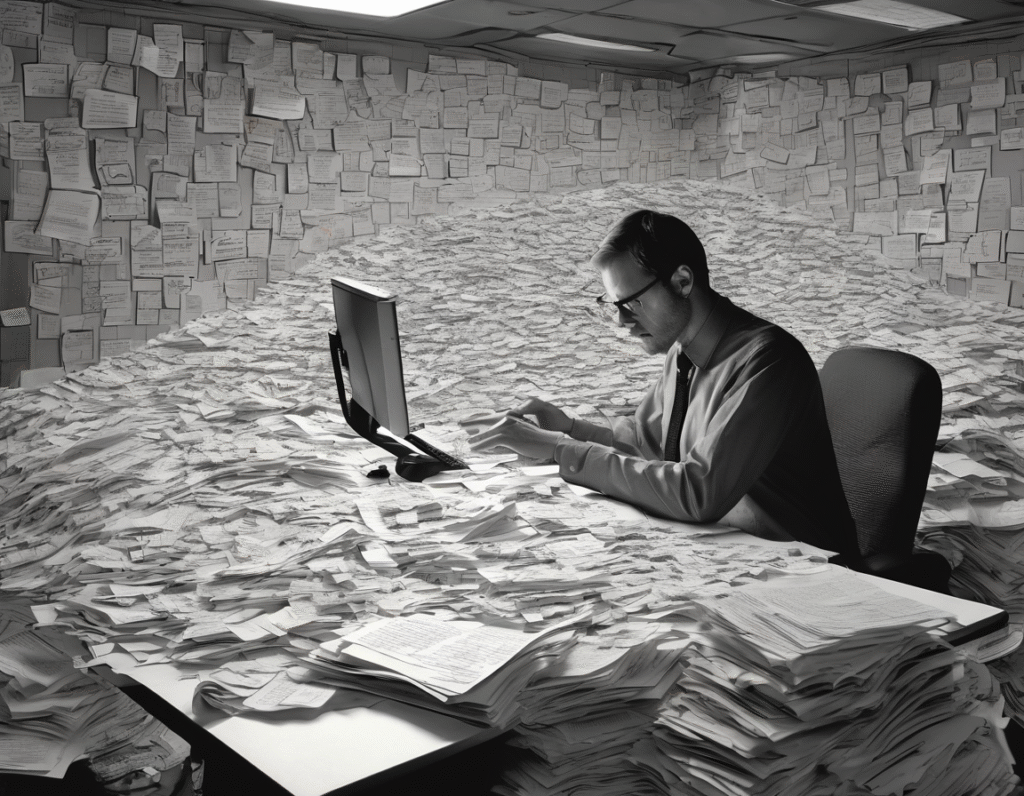

The Credibility Crisis: How AI Is Undermining AI Research

A troubling pattern is emerging in the world of artificial intelligence. The very tools designed to accelerate discovery are now flooding academic and industry channels with low-quality, auto-generated research papers. Experts are raising alarms that the field is being poisoned by a deluge of synthetic slop. This makes genuine progress harder to identify and threatens the integrity of scientific discourse.

The core of the issue is the reflexive and often undisclosed use of large language models. Researchers and students, under pressure to publish quickly, are increasingly using AI to draft papers or to write them entirely. The result is a surge of content that is superficially coherent but often devoid of original insight, riddled with factual inaccuracies, fabricated citations, and recycled ideas presented as novel. These papers are not pushing boundaries; they are clogging the pipelines of knowledge with algorithmic noise.

This creates a cascade of negative effects. Peer reviewers are overwhelmed by the volume, making it difficult to separate legitimate work from AI-generated filler. Conferences and preprint servers are becoming saturated, diluting the value of publication. Most dangerously, this slop entrenches existing biases and errors. Because AI models are trained on existing literature, they perpetuate and amplify its flaws. The result is a closed loop of declining quality: AI writes papers, and those papers then train the next generation of AI on corrupted data.

For the crypto and Web3 community, this is a familiar and urgent warning. The space has long battled misinformation, hype-driven whitepapers, and low-quality content designed to farm attention or fuel speculative frenzies. The invasion of AI slop into research mirrors crypto's own struggle with AI-driven trading bots, shallow protocol analyses, and automated shilling that offer no real value.

This underscores a critical principle: trust and verification are paramount in any frontier technology. The solution is not to reject AI tools outright, but to enforce rigorous standards of transparency and human oversight. Academia and the tech industry must urgently adopt clear policies requiring disclosure of AI use in research. Stronger verification processes, perhaps leveraging blockchain-based timestamping and proof-of-human-origin, could help authenticate genuine contributions. The focus must shift from quantity to quality, rewarding rigorous work over rapid, automated publication.

The irony is profound. A tool of immense potential is being used to erode the very foundation of progress it was meant to build. If left unchecked, the flood of AI slop risks creating a broken system in which finding the signal in the noise becomes a near-impossible task. The field of AI now faces a meta-crisis. It must solve the problem of its own tools undermining its credibility, or risk consuming itself from within.