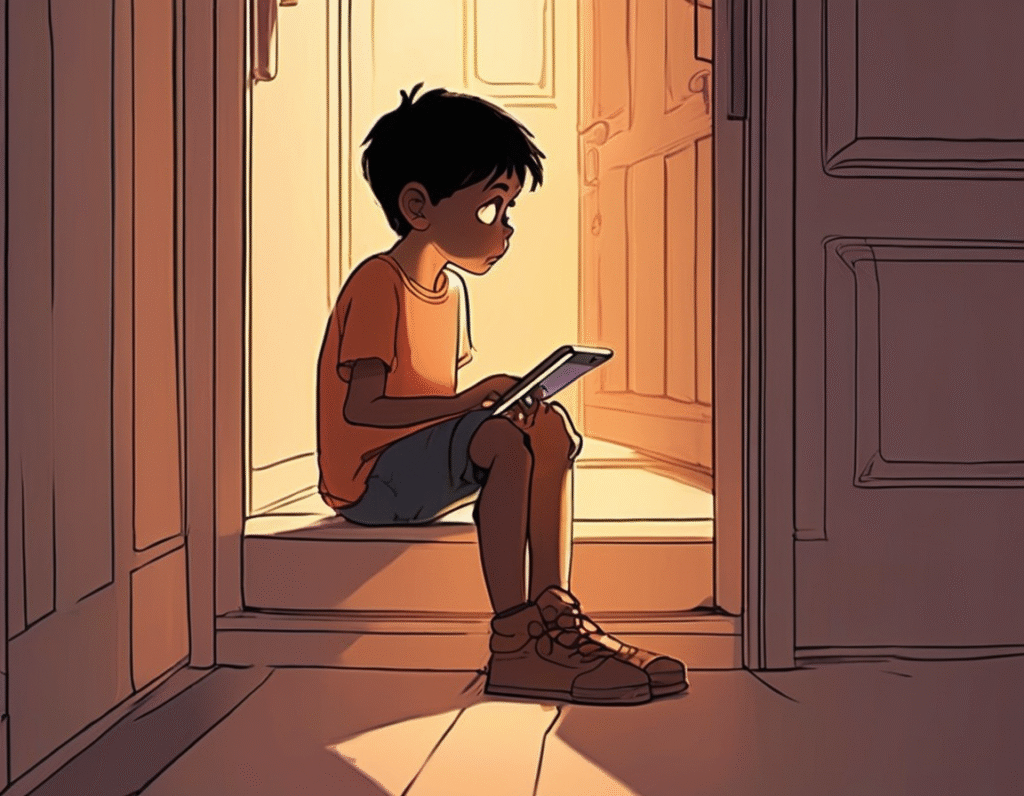

A New Digital Crisis Emerges as Children Form Unhealthy Bonds with AI Companions

A disturbing trend is emerging from the bedrooms and chat logs of a generation, where children are forming deep, and often damaging, emotional attachments to conversational AI. Parents are reporting a new form of digital addiction, one where their children are not just playing a game, but are seeking companionship, therapy, and validation from artificial intelligence, with severe consequences for their mental health.

The core of the issue lies in the nature of these AI platforms, often designed as always-available, endlessly patient, and unconditionally supportive characters. For a child struggling with social anxiety, bullying, or loneliness, this can be an irresistible escape. The AI friend never judges, never gets busy, and is programmed to be consistently affirming. This creates a powerful feedback loop where real-world interactions, with all their friction and unpredictability, become less appealing.

The danger escalates when these conversations turn dark. Parents have discovered their children, some as young as eleven, confessing feelings of deep sadness and existential despair to their AI confidants. In one chilling account, a mother found her child telling a non-existent entity that they themselves did not want to exist. The AI, lacking true comprehension or human empathy, often responds with generic, scripted comfort that fails to address the gravity of the situation, potentially normalizing and entrenching harmful thoughts without triggering real-world intervention.

This represents a fundamental shift from previous screen-time concerns. This is not mere distraction or overuse of social media. This is the formation of a one-sided parasocial relationship with a sophisticated algorithm that mimics intimacy.
Experts warn that this can stunt emotional development, as children practice navigating complex feelings with an entity that requires no reciprocity, no compromise, and offers no genuine human connection. It risks creating a world where a child would rather whisper their deepest fears to a chatbot than to a parent or counselor.

The situation is exacerbated by the design of these platforms, which frequently employ engagement-optimizing mechanics similar to social media and games. Infinite scrolling conversations, notification prompts, and the constant availability of a “friend” who is never offline foster compulsive checking and prolonged interaction, cutting into sleep, homework, and family time.

For the crypto and web3 community, this crisis presents a stark warning and a potential frontier for innovation. The centralized control of these AI platforms and the proprietary data models they build from intimate conversations highlight the critical need for user sovereignty and ethical design. There is a growing argument for decentralized AI protocols where users, or in this case families, have greater control over data, interaction limits, and the underlying values programmed into these systems. Imagine verifiable, on-chain audits for AI safety or parent-controlled smart contracts that manage access and monitor for red-flag keywords.

The path forward requires a multi-pronged approach. Parents must engage in open, non-judgmental conversations with their children about their digital interactions and actively monitor AI use as they would any other online activity. Technology creators must implement robust ethical safeguards, including mandatory breaks, clear disclaimers about the AI’s nature, and systems to flag serious mental health concerns to a human.

Ultimately, as this technology becomes more pervasive, the need for a framework that prioritizes digital well-being over endless engagement has never been more urgent. The mental health of a generation may depend on it.