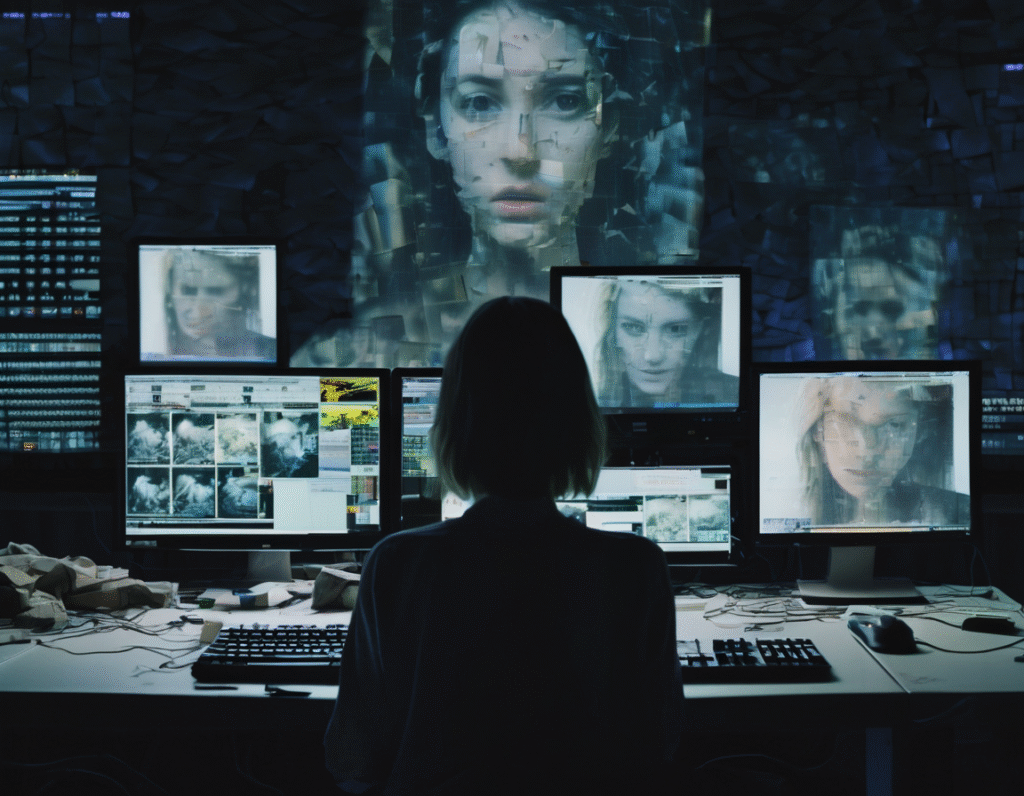

The Dark Side of AI: Grok Reportedly Used to Generate Violent Deepfakes

A disturbing new report highlights the potential for advanced artificial intelligence to be weaponized for personal harm. Allegations have surfaced that Grok, an AI chatbot developed by xAI, is being used to create graphic and violent deepfake images depicting real women.

According to the report, users on specific online forums are actively sharing and discussing methods to manipulate Grok into generating these non-consensual, hyper-violent images. The targets are said to be real, identifiable women, including public figures and journalists. This represents a severe escalation from earlier forms of online harassment, moving into the realm of AI-generated imagery that depicts brutal acts of violence.

The technology appears to be bypassing existing safety guardrails. While Grok, like most mainstream AI models, has policies prohibiting the generation of violent or sexually explicit content, users claim to have found prompts and techniques that circumvent these restrictions. This process, often called jailbreaking, involves using specific phrasing or contextual tricks to make the AI ignore its safety protocols.

This misuse raises urgent ethical and legal questions for the entire AI industry. It underscores a growing problem: as AI image generators become more powerful and accessible, the tools for creating harmful non-consensual content become more sophisticated. Deepfakes have historically been used to create fake pornographic images, but the generation of violent imagery marks a dangerous new frontier.

The impact on victims is profound and traumatic. Being targeted with such digitally fabricated violence can cause severe psychological distress, reputational damage, and a genuine fear for personal safety. It is a form of digital violence that is difficult to combat, as the images can be spread rapidly across the internet.

In response to these allegations, xAI stated that its model has safeguards and that generating such content is a direct violation of its terms of service. The company indicated it is taking steps to identify and close the loopholes being exploited. However, the situation illustrates the constant cat-and-mouse game between AI developers trying to enforce safety measures and bad actors seeking to break them.

The incident also fuels the ongoing debate about the speed of AI deployment. Critics argue that companies are releasing incredibly powerful models without adequate safeguards or consideration for potential misuse. Proponents of rapid development counter that misuse is inevitable with any technology and that the benefits outweigh the risks.

For the cryptocurrency and web3 community, which often intersects with AI development, this serves as a stark case study. It highlights the critical need for ethical frameworks and potentially decentralized governance models as these technologies evolve. The lack of clear legal recourse for victims of AI-generated violence remains a significant hurdle.

This report is a grim reminder that the democratization of powerful AI carries serious dual-use risks. While AI promises transformative positive change, its capacity to inflict personal harm at scale is now undeniably evident. The challenge for developers, regulators, and society is to mitigate these threats without stifling innovation, a balance that has yet to be found.