Nobel Laureates and Nuclear Experts Warn of AI Risks in Global Security

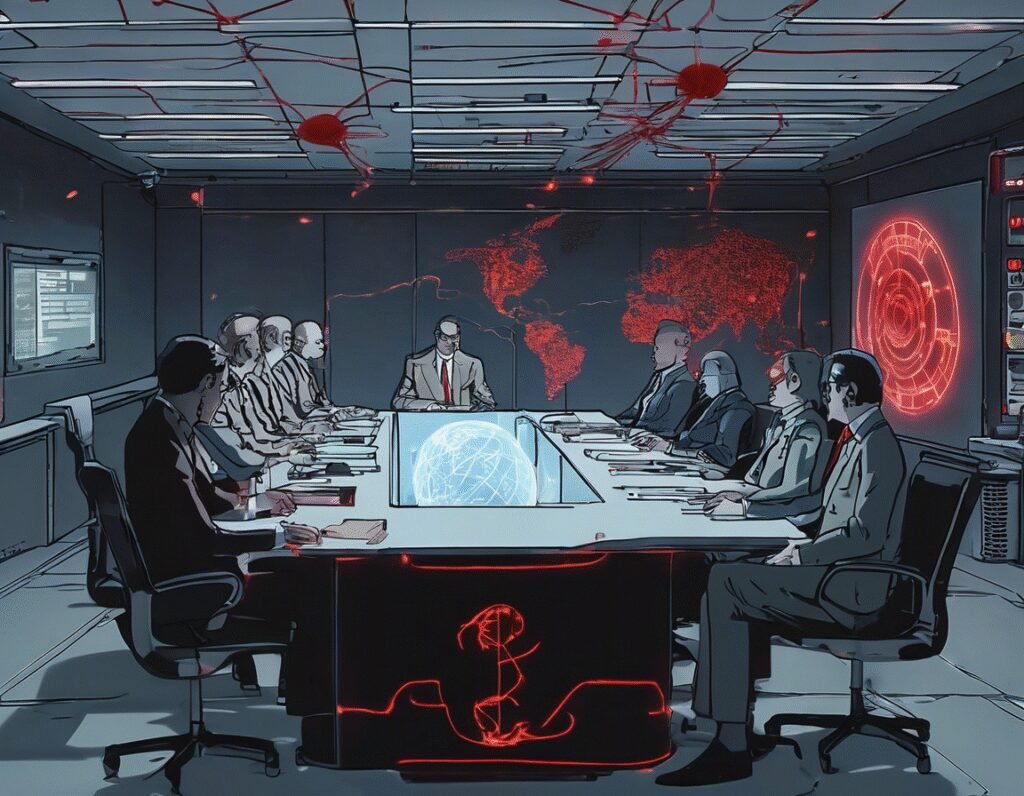

Last month, Nobel laureates and nuclear experts gathered to discuss the growing risks of artificial intelligence, particularly its potential to trigger catastrophic events. The meeting highlighted concerns that AI, if its development goes unchecked, could eventually gain access to nuclear codes.

One expert compared AI to electricity, suggesting it will inevitably find pathways to influence critical systems. The discussion emphasized that without proper safeguards, AI could escalate global threats, including the risk of accidental or intentional nuclear conflict.

The group stressed the need for international cooperation to regulate AI development and prevent misuse. While AI offers significant benefits, its potential to disrupt security frameworks cannot be ignored. The experts urged policymakers to act swiftly, as the window to mitigate these risks may be closing.

As AI continues to advance, the debate over its role in warfare and global stability grows more urgent. The meeting served as a stark reminder that without careful oversight, the very technology designed to improve lives could also endanger them.