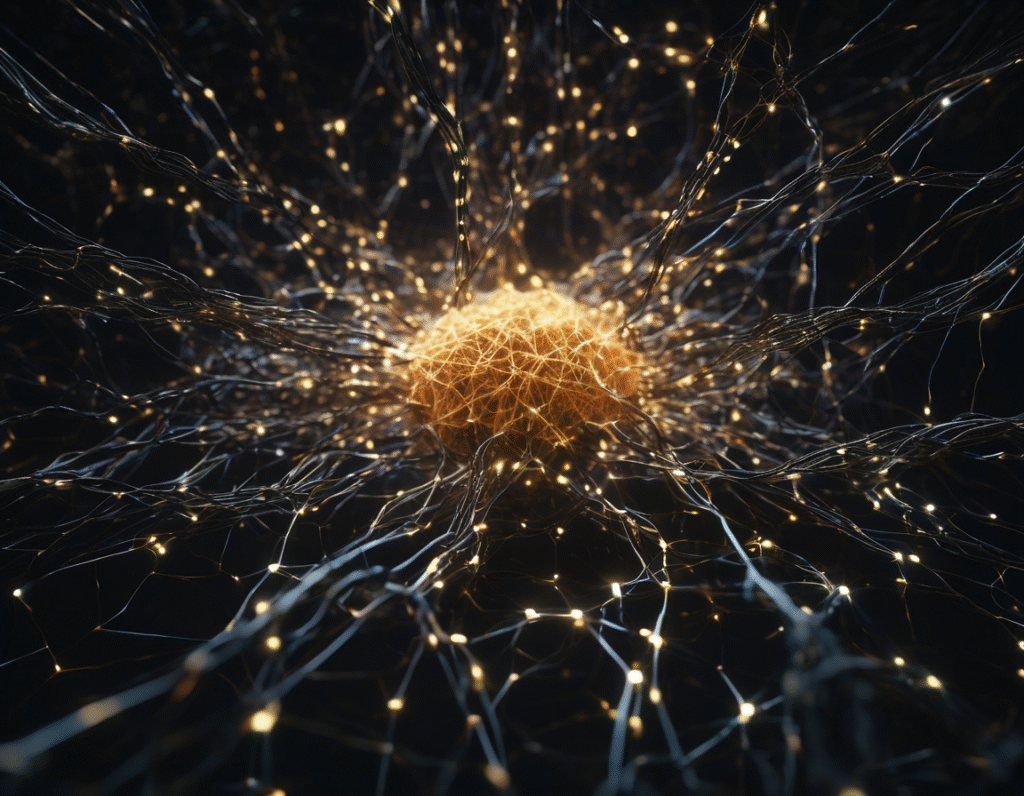

OpenAI’s Latest AI Was Created Using Itself, Company Claims

In a development that seems pulled straight from a science fiction novel, OpenAI has revealed that its newest artificial intelligence model was built with significant assistance from its own predecessors. The company claims this recursive self-improvement marks a pivotal step toward more advanced and capable AI systems.

As described, the process used earlier versions of OpenAI’s AI to generate synthetic data and to perform tasks crucial to training the new model, including evaluating AI responses, brainstorming, and even assisting with the initial coding. In effect, the company used AI to help build a more powerful AI, creating a feedback loop of technological advancement.

This method departs from traditional AI training, which relies heavily on vast datasets of human-generated text and on human trainers. By shifting more of the development burden onto AI, the process could in theory accelerate and scale in ways previously limited by human bandwidth.

For the crypto and Web3 community, this announcement is a seismic event. Recursive self-improvement is a core component of the singularity hypothesis, which posits a future point at which technological growth becomes uncontrollable and irreversible, with unforeseeable consequences for human civilization. That a leading AI lab is actively employing such techniques brings this speculative future into sharper, more immediate focus.

The implications for blockchain and decentralized systems could be profound. AI capable of rapidly iterating and improving upon itself could transform smart contract development, security auditing, and protocol design. It could enable more efficient and complex decentralized autonomous organizations (DAOs) or devise entirely new cryptographic mechanisms. The potential for innovation is staggering. However, this power comes with deep and urgent concerns.
A self-improving AI introduces unprecedented risks. In a crypto context, an advanced AI could potentially exploit vulnerabilities in smart contracts or blockchain protocols at a speed and scale that humans could not counter. The promise of hyper-efficient markets and systems is shadowed by the threat of hyper-intelligent exploits.

This development also intensifies the centralization debate. Building such powerful technology requires immense computational resources and capital, concentrating capability in the hands of a few large entities like OpenAI. This stands in stark contrast to the decentralized ethos of cryptocurrency. The question is whether the future of intelligence will be an open, permissionless protocol or a closed product controlled by a corporation.

The news also forces a reevaluation of the crypto industry’s own trajectory. Projects pursuing artificial general intelligence (AGI) on blockchain must now contend with the rapid, centralized progress of incumbents. The announcement underscores the need for robust, decentralized governance models to guide the integration of increasingly autonomous AI into our financial and social systems.

If OpenAI’s claim holds, it is a clear signal that the pace of AI advancement is not slowing; it may even be accelerating in a self-reinforcing cycle. Whether this is the first step toward a true singularity remains a matter of fierce debate. But it marks the beginning of a new era in which AI is not just a tool we build but an active participant in its own creation. For a world built on code and algorithms, that changes everything. The race is no longer just between developers, but between the technologies to which they are now, in part, handing the reins.