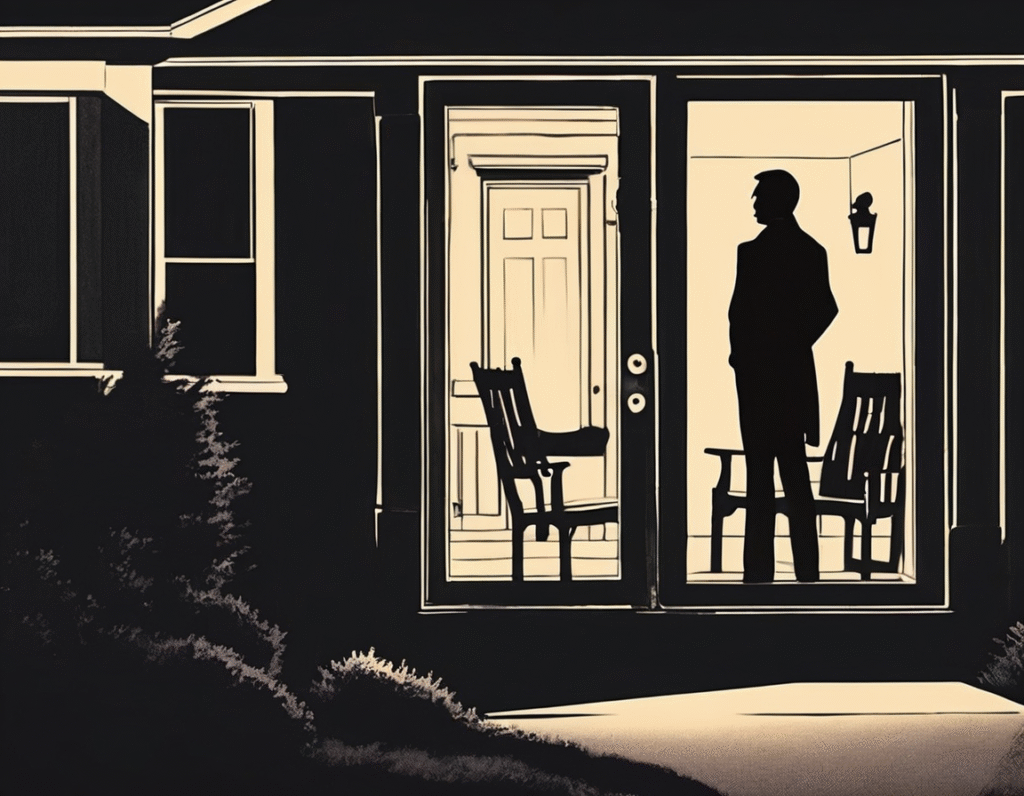

OpenAI Sends Representatives to Critics' Homes in Aggressive Tactics

A new and unsettling tactic is being reported in the ongoing debate over artificial intelligence safety. Representatives from OpenAI, the company behind ChatGPT, have reportedly been visiting the personal homes of critics and former employees. These visits are described as confrontational, with the visitors making threats and demands regarding the critics' public statements.

The situation highlights the extreme power imbalance at play. One source described the fear of knowing that one of the world's most powerful and valuable private companies has your home address, has shown up unannounced, and is pressuring you to retract your views. This move is seen as an escalation beyond legal letters or public relations campaigns, taking disputes directly to a person's private doorstep.

The individuals being visited are often those who have raised significant concerns about AI safety and the internal culture at OpenAI. They argue for more transparency and slower, more careful development of powerful AI systems. The home visits appear to be an attempt to silence this criticism through intimidation.

This aggressive strategy raises serious ethical and legal questions. While companies often defend their intellectual property and public image, using personal visits to private residences crosses a line for many observers. It creates an atmosphere of fear and sends a chilling message to other potential whistleblowers or critics within the tech industry.

The context makes these actions particularly alarming. OpenAI is a leader in a field that many experts believe poses existential risks to humanity. The company handles vast amounts of sensitive data and is developing technology that could reshape society. Critics argue that such a company should be subject to intense public scrutiny, not operate with a culture of secrecy and retaliation.

The reported incidents suggest a pattern of attempting to control the narrative by any means necessary. For a company whose mission statement once centered on ensuring that artificial general intelligence benefits all of humanity, these heavy-handed tactics seem contradictory. They point to a shift towards a more corporate, defensive posture as the stakes and commercial pressures have grown.

This news arrives amid broader sector-wide concerns about the concentration of AI power in a few unaccountable corporations. The crypto and web3 communities, which often champion decentralization and transparency, are watching these developments closely. The centralized control of foundational AI models by a handful of companies is seen as a critical point of failure, both for innovation and for safety.

The tactic of home visits could ultimately backfire. Instead of quieting critics, it may draw more attention to their warnings and validate concerns about the company's culture. It reinforces the argument that powerful AI developers cannot be trusted to self-regulate and that independent oversight is urgently needed.

For the broader tech ecosystem, this is a cautionary tale. It underscores the importance of building systems with transparency and accountability from the start, principles that are core to the blockchain world. As AI continues to evolve, the methods used to manage its discourse and dissent will be just as important as the technology itself. The future of this powerful technology may depend on ensuring that criticism is met with dialogue, not intimidation at one's front door.