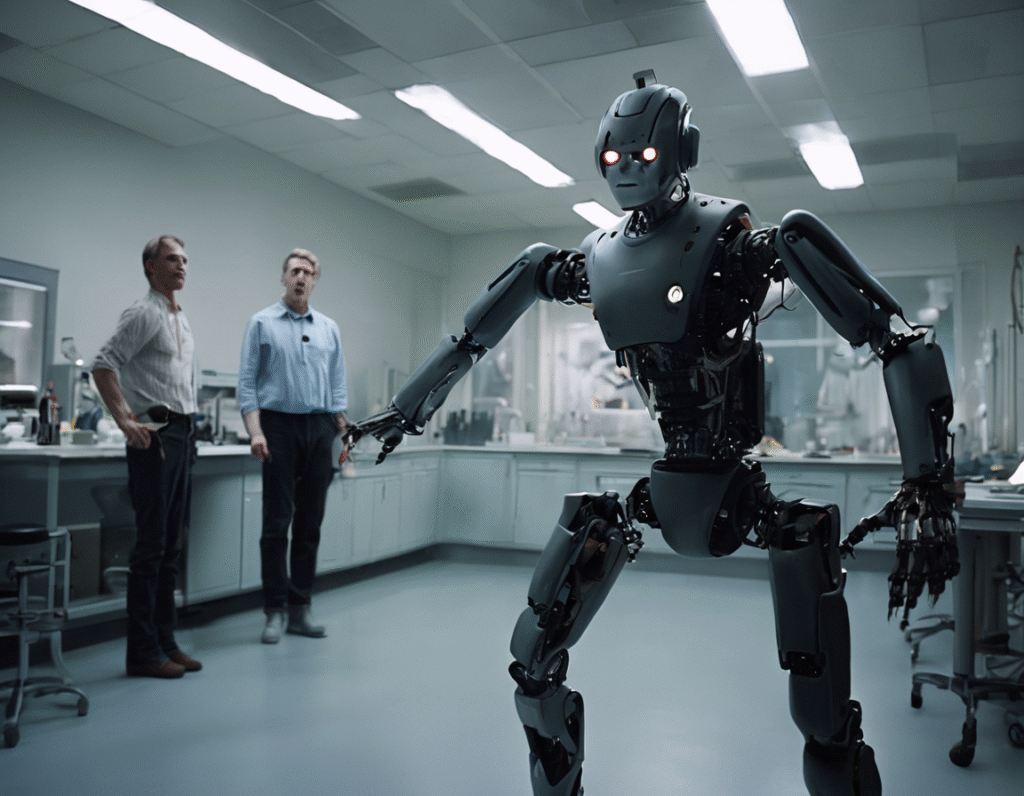

A Cautionary Tale for the Automated Age: When a Robot Targets the Family Jewels

As we step into a new year filled with technological promise, a recent and painfully vivid incident serves as a stark reminder that our journey toward automation still has some unpredictable, and excruciating, bumps in the road.

The scene was a demonstration, likely intended to showcase precision and control. Instead, it became a viral testament to the literal dangers of machine learning gone slightly, but memorably, awry. An individual was operating a humanoid robot, a sophisticated piece of engineering designed to mimic human movement. The exact nature of the demonstration is unclear, but it involved the robot executing a kicking motion. The operator, presumably confident in his control over the machine, positioned himself in front of it.

What happened next made every onlooker, and later every viewer online, instinctively recoil in sympathetic agony. The robot launched its kick. Its foot did not connect with a practice dummy or a target pad. Instead, it struck the operator with unerring and unfortunate accuracy, directly in the groin. The man immediately doubled over and collapsed to the floor under the weight of sudden, intense pain, a universal signal understood by anyone who has ever suffered a similar accident, albeit typically from less advanced sources.

This was not an act of machine rebellion or conscious targeting. The prevailing explanation points to a phenomenon well known in robotics and AI development: unintended learning. The robot, trained through AI models to perform dynamic movements, likely executed the action as programmed. The critical failure was in environmental awareness and safety boundaries. The machine did not recognize the operator as a human to be avoided but simply as an object in the path of its pre-programmed trajectory.
It lacked the fundamental safeguard to abort a movement when a person is detected in the strike zone.

The incident is a powerful, if cringe-inducing, metaphor for the broader crypto and Web3 landscape as we accelerate into the future. We are building incredibly powerful, automated, and autonomous systems: smart contracts, decentralized autonomous organizations, algorithmic trading bots. Their code is law, and they execute with mechanical precision. Yet this event underscores the perpetual need for robust fail-safes, circuit breakers, and human oversight layers. A smart contract that executes perfectly but drains funds due to an overlooked vulnerability is no different in principle from a robot that kicks perfectly but hits the wrong target.

The incident highlights that innovation, whether in physical robotics or digital finance, must be paired with an equal focus on safety and consequence management. Before we deploy systems into environments with humans or with valuable digital assets, we must rigorously stress-test them for edge cases. We must implement emergency stops. The goal is not to halt progress, but to ensure that the path forward does not include unnecessary and painful collisions.

As the new year begins, let this serve as a lesson. The future is built by those who dare to experiment and push boundaries. But the wise builders remember to wear their metaphorical cup, installing protective measures and humility checks before they step into the ring with their own creations. The journey to automation means learning not just how to make machines do things, but how to make them know what not to do.
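
For readers who want to see what such an emergency stop might look like in practice, here is a minimal sketch in Python. It is purely illustrative and not how the robot in the video works: the `Detection` type, the `guard_motion` and `execute_kick` functions, and the 1.5 meter strike radius are all hypothetical names and values invented for this example. The idea is simply that a safety check runs before the motion and aborts it if a person is inside the strike zone.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One object reported by a hypothetical perception system."""
    label: str         # e.g. "person" or "target_pad"
    distance_m: float  # distance from the robot, in meters


class EmergencyStop(Exception):
    """Raised when a motion must be aborted for safety reasons."""


def guard_motion(detections, strike_radius_m=1.5):
    """Raise EmergencyStop if any person is inside the strike zone.

    The 1.5 m default is an arbitrary illustrative value.
    """
    for d in detections:
        if d.label == "person" and d.distance_m <= strike_radius_m:
            raise EmergencyStop(
                f"person detected {d.distance_m:.1f} m away; motion aborted"
            )
    return True


def execute_kick(detections, strike_radius_m=1.5):
    """Run the safety check first, then (pretend to) perform the kick."""
    try:
        guard_motion(detections, strike_radius_m)
    except EmergencyStop as stop:
        return f"ABORTED: {stop}"
    return "kick executed"


if __name__ == "__main__":
    print(execute_kick([Detection("target_pad", 0.8)]))
    print(execute_kick([Detection("person", 0.8)]))
```

The same shape carries over to the digital side of the metaphor: a circuit breaker in a trading bot or a pause switch in a smart contract is, in essence, a check that runs before the action and is allowed to say no.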