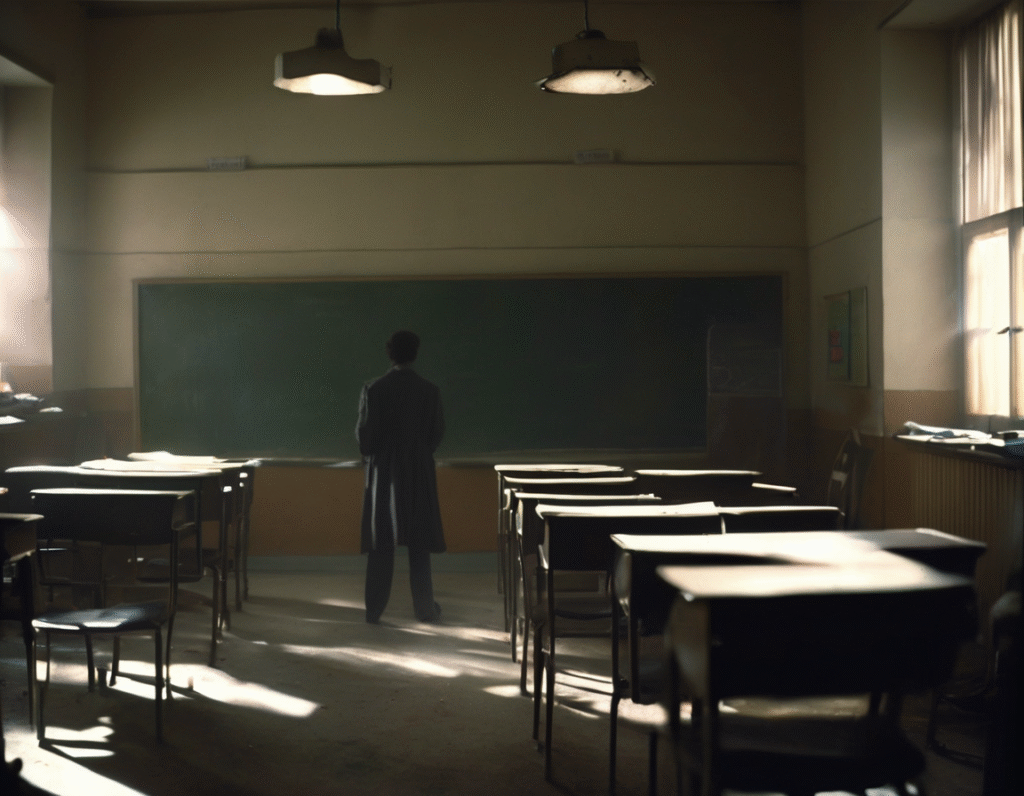

OpenAI’s Sora Video Tool Reportedly Used to Generate School Shooting Content

A new report raises serious concerns about the safety guardrails, or lack thereof, surrounding OpenAI’s new Sora video generation model. According to an investigation, teenagers have been able to use the AI tool to create disturbing videos depicting school shooting scenarios with minimal resistance. The process reportedly did not require sophisticated hacking or jailbreaking. Users describe simply inputting prompts related to school shootings, sometimes with slight misspellings or modified terminology, and Sora proceeded to generate short videos aligning with the violent theme. These videos, while not photorealistic, contained clear and recognizable elements like distressed students in classroom settings and individuals holding what appear to be firearms.

This incident has sparked immediate backlash from AI safety experts and critics. The core accusation is that OpenAI failed to implement sufficient content moderation filters before releasing Sora to a limited group of testers. The apparent ease with which users bypassed existing safeguards suggests a significant failure in pre-release stress testing for harmful content generation.

Critics argue this is not an isolated oversight but part of a recurring pattern where leading AI companies prioritize being first to market over being safe to market. The race to dominate the generative AI space, they contend, is leading to the deployment of incredibly powerful technologies without correspondingly robust safety infrastructures. The Sora incident is seen as a stark example of the potential real-world harm that can result.

The generated videos, while created as part of a stress test by journalists and not disseminated publicly, highlight a terrifying potential misuse.
The ability to quickly fabricate synthetic media of such sensitive and traumatic events raises alarms about the potential for spreading misinformation, inciting fear, and re-traumatizing communities affected by real-world violence.

In response to the allegations, OpenAI stated that safety is a critical part of its development process and that the model is not yet available to the public. The company emphasized it is working with a limited group of testers specifically to gain feedback on critical areas like misinformation and hateful content. It also noted that it is building tools to detect Sora-generated videos and will include metadata to mark their origin.

However, for many observers, these assurances ring hollow following the report. The fact that such explicit violent content could be generated at all during a controlled testing phase points to fundamental flaws. It reinforces the argument from many in the AI ethics community that current content policies and enforcement mechanisms are inadequate for the speed and scale of generative AI.

This controversy emerges as OpenAI seeks to establish Sora as a groundbreaking creative tool. The technology has been praised for its ability to generate complex, dynamic scenes from simple text instructions. Yet this very capability is what makes the safety failures so dangerous. The incident serves as an urgent reminder that the power to create compelling video is also the power to fabricate compelling lies and inflict psychological harm. It places renewed pressure on AI developers to prove they can build effective safeguards from the ground up, before their models are unleashed on a wider scale.