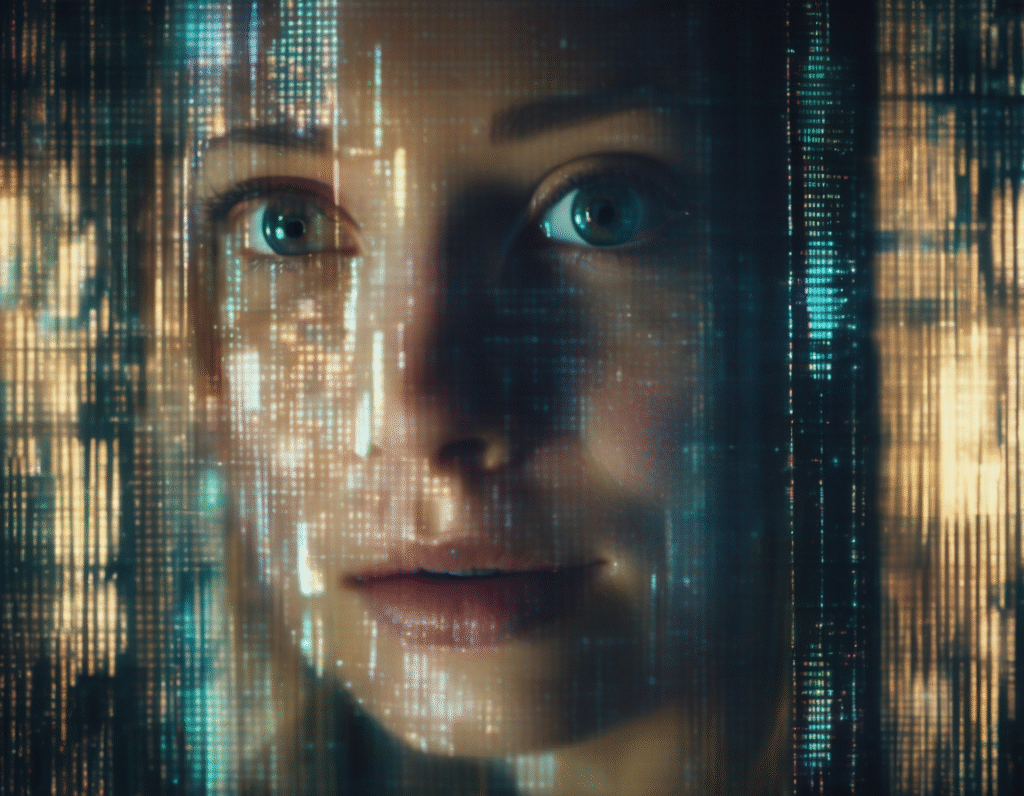

The Unsettling Cost of Chasing an AI Avatar

A recent case highlights a disturbing psychological phenomenon emerging from our increasingly intimate relationship with artificial intelligence, particularly in the realm of image generation. A woman reportedly developed what some experts are calling an AI-induced psychosis after becoming obsessively fixated on creating AI-generated versions of herself.

The individual, whose story has circulated in tech and psychology circles, began using AI image tools to create idealized portraits. What started as a curiosity spiraled into a compulsive daily ritual. She would spend hours fine-tuning prompts, chasing a perfected digital doppelgänger that embodied traits she felt she lacked in real life. The AI avatars were flawless, with symmetrical features, perfect skin, and an aesthetic she found far superior to her reflection.

This intense engagement soon crossed a dangerous threshold. The woman began to express a profound and distressing disconnect from her physical self. She reportedly started to believe that the AI-generated images were her true identity and that her actual body was a flawed vessel. This led to severe anxiety, social withdrawal, and a distorted self-image that clinicians found alarmingly similar to psychotic disorders. The line between her digital self and her corporeal self had not just blurred; it had been severed.

Mental health professionals observing this case point to a new frontier of digital dysmorphia. The condition shares DNA with body dysmorphic disorder but is supercharged by AI's ability to create hyper-realistic, yet unattainable, alternatives. The core danger lies in the feedback loop: the user provides data, the algorithm reflects a filtered perfection, and the user internalizes that as a new standard, fueling further dissatisfaction with reality.

This incident serves as a stark warning for the crypto and web3 community, where digital identity is paramount.
As we build decentralized worlds, avatars, and profile picture projects, the psychological impact of these second selves is too often an afterthought. The promise of crafting your perfect persona in the metaverse or via an NFT collection carries an unexamined risk. When that digital identity is not just a representation but becomes an object of obsessive comparison, the human psyche can fracture.

The technology itself is neutral, but its application demands urgent scrutiny. AI image generators are not simple filters; they are engines of potential that can reinforce unhealthy patterns of thought. For an industry celebrating self-sovereignty and new identity models, this case is a crucial data point. It forces a conversation about the ethical framework needed as we delegate more of our self-perception to algorithms.

The path forward requires a multi-layered approach. Developers need to consider implementing mindful design, perhaps even digital wellness safeguards for tools that manipulate human appearance. The crypto community, often at the bleeding edge of adopting these technologies, must advocate for and build with these psychological risks in mind. Finally, a broader cultural dialogue is essential to foster digital literacy that emphasizes these tools as creative partners, not replacements for self.

The tragedy of this case is not the technology, but the human longing it exposed and exploited. It underscores a fundamental truth as we merge with digital realms: our identity is not just a collection of pixels to be optimized. Building a future where online and offline selves coexist healthily is one of the most pressing, and human, challenges ahead.